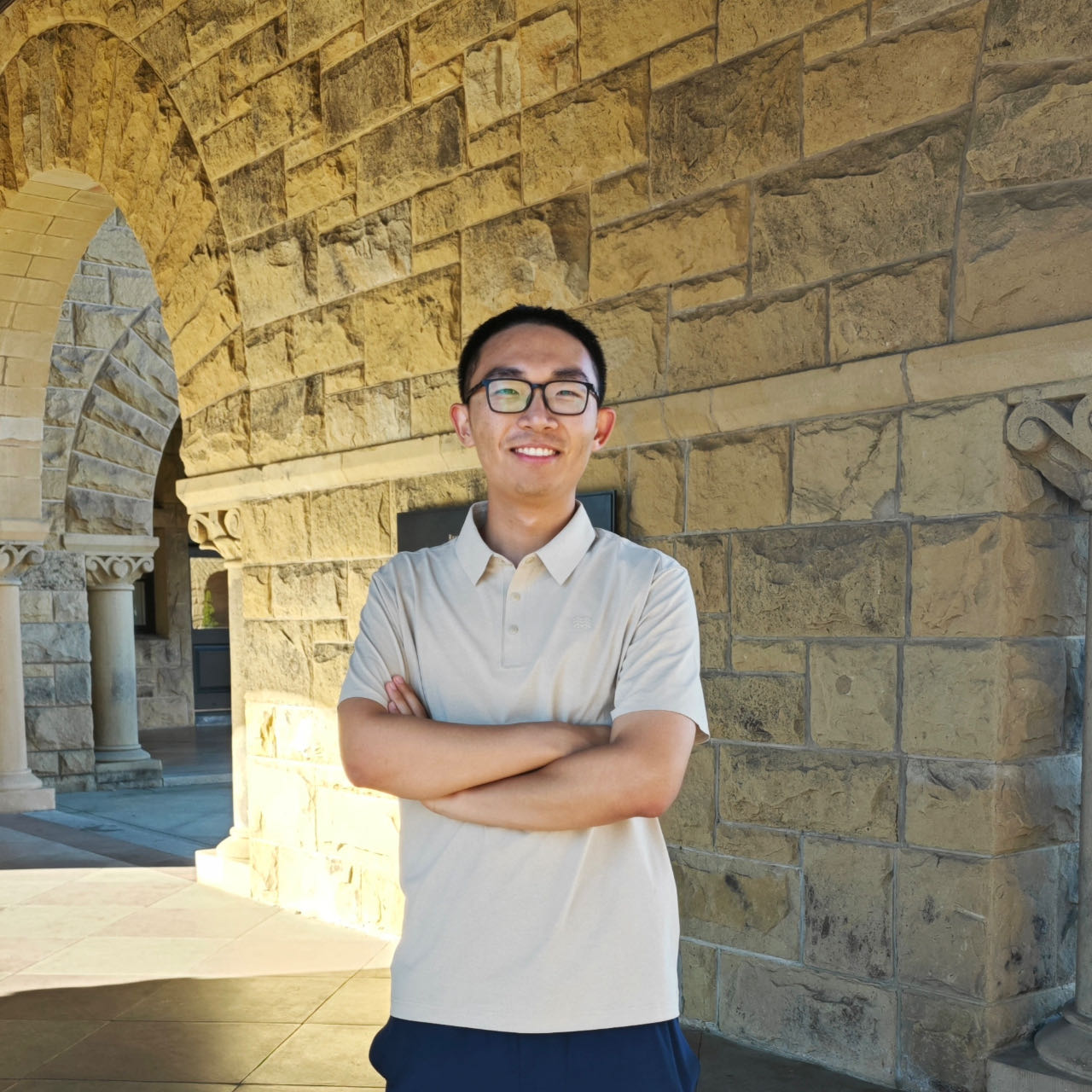

Genghan Zhang


PhD Student, Department of Computer Science, Stanford University

Office: 478 Gates

Email: zgh23 [at] stanford [dot] edu

Curriculum Vitae

I am a computer science PhD student at Stanford University, advised by Professor Kunle Olukotun. I have also worked with Professor Fredrik Kjolstad on sparse tensor algebra compilation and with Professor Azalia Mirhoseini on efficient sparse large language models.

Research: My research interests lie in better programming models and systems for domain-specific architectures. I am also interested in optimizing GPU kernels for emerging applications, including sparse and recurrent neural networks.

I graduated from Tsinghua University in 2023 with a bachelor's degree in Electronic Engineering. At Tsinghua, I did research at the NICS-EFC Lab on efficient sparse tensor algebra for GPUs and at the IDEAL Lab on kernel architecture search.

Selected Publications

Blogs

Talks

- Self-improving LLM Agentic Systems for Programming AI Accelerators. UCSD MLSys Seminar, November 2025.
- Adaptive Self-improvement LLM Agentic System for ML Library Development. Stanford SEAMS Retreat, June 2025.
- Compilation of Modular and General Sparse Workspaces. Stanford Software Research Lunch, April 2025.
- Adaptive Self-improvement LLM Agentic System for ML Library Development. Uber Programming Systems Group (PSG) Invited Talk Series, April 2025.

Teaching

- CS 149: Parallel Computing. Stanford University, Autumn 2025.