My undergraduate research projects

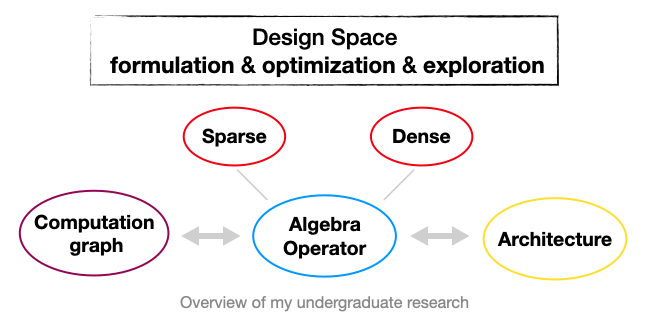

I completed three research projects with professors from Stanford University, UC Irvine, and Tsinghua University. Each explored one aspect of the tensor algebra design space. In the Sparse Workspace project, I formulated a new design space by introducing discordant accumulation operations into sparse compilers. In KAS, I developed new space exploration techniques for dense computation graphs of neural networks. Finally, in Sgap, I optimized a single point in the space: sparse-dense hybrid operators on GPUs.

Sparse Workspace

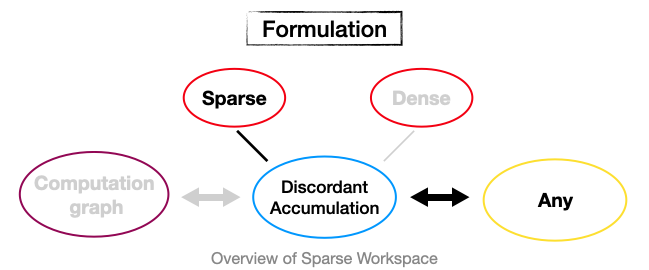

I formulated a new design space: introducing discordant accumulation operations into sparse compilers. I first observed that the existing algorithm could not correctly infer reduction operators, so I proposed five logic rules, called Forall Context, that determine the reduction operator for any workspace expression. This technique has been integrated into TACO.

We then identified the discordant accumulation problem: the current algorithm can only handle concordant point streams, and it allocates a large amount of memory unnecessarily. To address this, I designed a new algorithm called sparse workspace, a flexible sparse compilation algorithm that handles both concordant and discordant point streams while letting users control the intermediate memory cost. This work was supervised by Professor Fredrik Kjolstad. A technical report for this project can be found here.
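
To make the discordant accumulation problem concrete, here is a minimal Python sketch. It assumes that a point stream is discordant when output coordinates arrive out of storage order, as when a Gustavson-style SpGEMM adds scaled rows of B into one row of C. The names `accumulate_row`, `merge_sorted`, and `capacity` are hypothetical: the workspace is a dictionary bounded to `capacity` distinct coordinates, flushed into the sorted result by a merge whenever it fills. This is a generic bounded-buffer scheme for illustration, not the algorithm from the technical report and not code that TACO generates.

```python
from typing import Dict, Iterable, List, Tuple

Row = List[Tuple[int, float]]  # sorted (column, value) pairs of one sparse row


def merge_sorted(a: Row, b: Row) -> Row:
    """Merge two sorted sparse rows, summing values that share a column."""
    out: Row = []
    i = j = 0
    while i < len(a) and j < len(b):
        if a[i][0] < b[j][0]:
            out.append(a[i])
            i += 1
        elif a[i][0] > b[j][0]:
            out.append(b[j])
            j += 1
        else:
            out.append((a[i][0], a[i][1] + b[j][1]))
            i += 1
            j += 1
    out.extend(a[i:])
    out.extend(b[j:])
    return out


def accumulate_row(stream: Iterable[Tuple[int, float]], capacity: int) -> Row:
    """Sum an unordered (discordant) stream of (column, value) points into a
    sorted sparse row, holding at most `capacity` distinct columns at once.
    Hypothetical helper for illustration only."""
    assert capacity >= 1
    workspace: Dict[int, float] = {}
    result: Row = []
    for col, val in stream:
        if col not in workspace and len(workspace) == capacity:
            # Workspace full: sort it, merge it into the result, and start over.
            result = merge_sorted(result, sorted(workspace.items()))
            workspace.clear()
        workspace[col] = workspace.get(col, 0.0) + val
    return merge_sorted(result, sorted(workspace.items()))


# Usage: row 0 of C = A @ B with A[0, 0] = 2.0, A[0, 2] = 1.0,
# B[0, :] = {3: 1.0, 5: 2.0}, B[2, :] = {1: 4.0, 3: 1.0}.
# The products arrive in column order 3, 5, 1, 3: a discordant stream.
stream = [(3, 2.0), (5, 4.0), (1, 4.0), (3, 1.0)]
row = accumulate_row(stream, capacity=2)
```

When `capacity` is at least the number of distinct output columns, no merge is ever needed, which is the behavior a full-width dense workspace provides at a higher memory cost; smaller values trade extra merge passes for less intermediate memory.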

Kernel Architecture Search

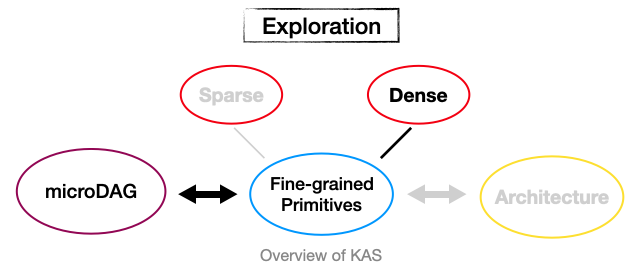

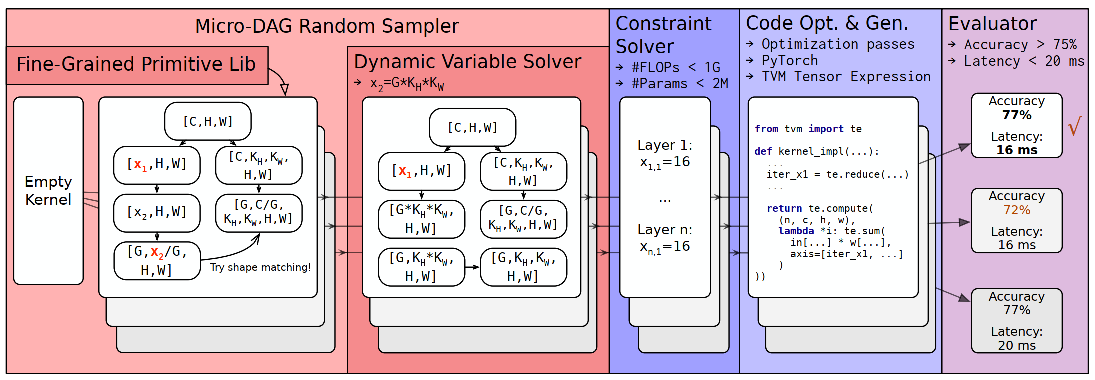

I developed new space exploration techniques for dense computation graphs of tensor programs. We proposed Kernel Architecture Search (KAS), which searches for efficient computation graphs that achieve the highest classification accuracy within the same hardware budget. We implemented a system, Canvas, to evaluate the KAS idea. I optimized Canvas's graph generation algorithm, reducing the sampling time from seconds to milliseconds. KAS finds network designs with up to 1.5x inference speedups over the previous state of the art, with acceptable accuracy loss. This work was supervised by Professor Mingyu Gao. We submitted the paper, on which I am the second author, to MLSys 2023; it is still under review.
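
The idea of searching for the most accurate design within a fixed hardware budget can be sketched generically. The Python code below is a plain budget-constrained random search over a toy, hypothetical space of convolution variants; `ConvCandidate`, `conv_macs`, `budgeted_search`, and `toy_accuracy` are all illustrative names. Multiply-accumulate (MAC) count stands in for the hardware budget, and the `evaluate` callback stands in for training a candidate and measuring its accuracy. It does not reproduce how KAS or Canvas actually generates computation graphs.

```python
import random
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class ConvCandidate:
    """One point in a toy, hypothetical search space for a single conv layer."""
    kernel: int        # spatial kernel size (k x k)
    groups: int        # number of channel groups
    out_channels: int


def conv_macs(c: ConvCandidate, in_channels: int, h: int, w: int) -> int:
    """Multiply-accumulates of a stride-1, same-padded grouped convolution."""
    return h * w * c.out_channels * (in_channels // c.groups) * c.kernel * c.kernel


def budgeted_search(
    in_channels: int,
    h: int,
    w: int,
    budget: int,
    evaluate: Callable[[ConvCandidate], float],
    trials: int = 200,
    seed: int = 0,
) -> Optional[ConvCandidate]:
    """Random search: sample candidates, reject any over the MAC budget,
    and keep the feasible one with the highest evaluate() score."""
    rng = random.Random(seed)
    group_choices = [g for g in (1, 2, 4, 8) if in_channels % g == 0]
    best: Optional[ConvCandidate] = None
    best_score = float("-inf")
    for _ in range(trials):
        cand = ConvCandidate(
            kernel=rng.choice([1, 3, 5]),
            groups=rng.choice(group_choices),
            out_channels=rng.choice([32, 64, 128]),  # divisible by every group count
        )
        if conv_macs(cand, in_channels, h, w) > budget:
            continue  # infeasible: exceeds the hardware budget
        score = evaluate(cand)  # stand-in for training and measuring accuracy
        if score > best_score:
            best, best_score = cand, score
    return best


def toy_accuracy(c: ConvCandidate) -> float:
    # Hypothetical proxy score; a real search would train and validate.
    return c.out_channels * c.kernel / c.groups


# Usage: budget = cost of a dense 3x3, 64->64 conv on a 32x32 feature map.
baseline = ConvCandidate(kernel=3, groups=1, out_channels=64)
budget = conv_macs(baseline, in_channels=64, h=32, w=32)
best = budgeted_search(64, 32, 32, budget, toy_accuracy)
```

Filtering with the cheap cost model before calling `evaluate` reserves the expensive accuracy measurement for candidates that already fit the budget.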

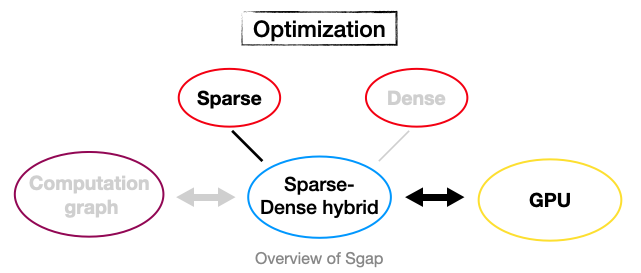

Sgap

I optimized a single point in the space: sparse-dense hybrid operators on GPUs. We observed that GPUs perform better on regular data, and that the benefit of regularity can outweigh the cost of some redundant computation. Exploiting this trait, I developed atomic parallelism to accelerate SpMM GPU kernels, achieving speedups of 1.6x to 2.3x. This optimization has been integrated into dgSPARSE. I then generalized atomic parallelism into a sparse compiler technique called segment group. Equipped with segment group, the sparse compiler can generate sparse GPU kernels that run 1.2x faster than before. This work was jointly supervised by Professor Yu Wang and Professor Sitao Huang. We submitted Sgap (segment group and atomic parallelism) to CCF Transactions on High Performance Computing, and I am the first author of this publication.
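
As a rough illustration of the atomic-parallelism idea, the Python sketch below simulates a CSR SpMM in which work is split by nonzeros rather than by rows. It assumes that atomic parallelism means giving each parallel worker an equal-size segment of nonzeros, regardless of row boundaries, and combining partial row sums from different segments with atomic additions. The function name `spmm_nnz_split` and the `segment_size` parameter are hypothetical. The loops run sequentially, so the comments only mark where a CUDA kernel would need `atomicAdd`; this is not the dgSPARSE implementation.

```python
from typing import List

Matrix = List[List[float]]


def spmm_nnz_split(
    indptr: List[int],
    indices: List[int],
    data: List[float],
    B: Matrix,
    segment_size: int,
) -> Matrix:
    """C = A @ B for a CSR matrix A (indptr, indices, data) and dense B.

    Nonzeros are cut into equal-size segments that ignore row boundaries.
    Each segment stands for one parallel worker; it sums products into a
    per-row partial result and adds that partial result into C."""
    n_rows = len(indptr) - 1
    n_cols = len(B[0])
    C = [[0.0] * n_cols for _ in range(n_rows)]
    nnz = indptr[-1]
    # row_of[p] is the row owning nonzero p, so a segment may start mid-row.
    row_of = [r for r in range(n_rows) for _ in range(indptr[r], indptr[r + 1])]

    def flush(row: int, partial: List[float]) -> None:
        for j in range(n_cols):
            C[row][j] += partial[j]  # would be atomicAdd in a CUDA kernel

    for start in range(0, nnz, segment_size):  # one iteration = one worker
        end = min(start + segment_size, nnz)
        row = row_of[start]
        partial = [0.0] * n_cols
        for p in range(start, end):
            if row_of[p] != row:  # crossed into a new row: emit the old sum
                flush(row, partial)
                row, partial = row_of[p], [0.0] * n_cols
            b_row = B[indices[p]]
            for j in range(n_cols):
                partial[j] += data[p] * b_row[j]
        flush(row, partial)
    return C


# Usage: A = [[1, 0, 2], [0, 0, 3], [4, 5, 0]] in CSR form; row 2 straddles
# the second and third segments when segment_size = 2.
indptr, indices, data = [0, 2, 3, 5], [0, 2, 2, 0, 1], [1.0, 2.0, 3.0, 4.0, 5.0]
B = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
C = spmm_nnz_split(indptr, indices, data, B, segment_size=2)
```

Equal-size segments keep the per-worker load uniform even when row lengths vary widely, which matches the observation that GPUs favor regular work; the price is extra atomic traffic on rows that straddle segment boundaries.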